LM Studio 0.3.14: Multi-GPU Controls 🎛️

LM Studio 0.3.14 has new controls for setups with 2+ GPUs

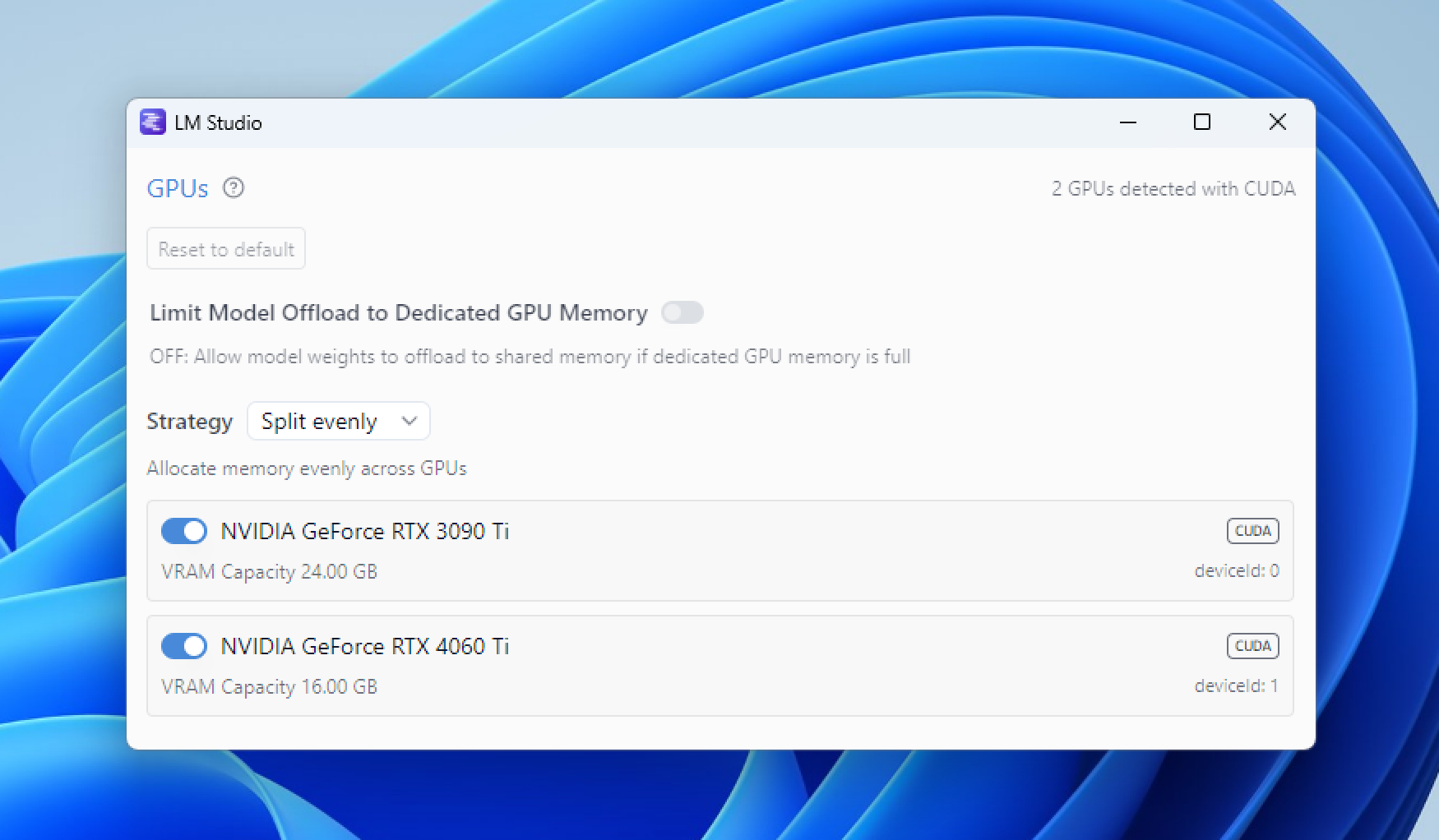

LM Studio 0.3.14 introduces new granular controls for multi-GPU setups. New features include the ability to enable or disable specific GPUs, choose an allocation strategy (split evenly or fill in priority order), and limit model weights to dedicated GPU memory.

Upgrade via in-app update, or from https://lmstudio.ai/download.

GPU Bourgeoisie 🎩

If you have more than one GPU in your system, you might not be considered "GPU poor" anymore. But with great power comes great responsibility: you need to manage your GPUs wisely to get the best performance out of them.

In LM Studio 0.3.14, we introduce new knobs to help you manage your GPU resources more intentionally. Some of these features are currently available only for NVIDIA GPUs; we are actively working to bring them to AMD GPUs as well.

Multi-GPU Controls

Use Ctrl+Shift+Alt+H to pop them out in a new window.

To open the GPU controls, press Ctrl+Shift+H on Windows or Linux, or Cmd+Shift+H on Mac. To open them in a pop-out window instead, press Ctrl+Alt+Shift+H (Cmd+Option+Shift+H on Mac).

As we learn more about optimal configurations, we aim to add automatic modes alongside the manual controls. These features are currently available only in LM Studio's GUI; support in the lms CLI will come in the future.

Enable or Disable Specific GPUs

Use the switch next to each GPU to enable or disable it. Disabling a GPU means LM Studio won't use it. This can be useful if you have a mix of powerful and less powerful GPUs, or if you want to reserve a GPU for other tasks.

The video demonstrates disabling GPU 1 and then loading a model. The model is loaded on GPU 0 only, with no activity on GPU 1.

Disable GPU 1 and load a model on GPU 0 only
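To illustrate what disabling a GPU means for allocation, here is a minimal sketch that splits a model's layers evenly across only the enabled GPUs. It is a hypothetical model, not LM Studio's implementation: the `Gpu` type, the `split_evenly` function, and the assumption that a model is divided into whole layers are all illustrative.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    index: int
    enabled: bool


def split_evenly(num_layers: int, gpus: list[Gpu]) -> dict[int, int]:
    """Hypothetical sketch: spread layers as evenly as possible over enabled GPUs.

    Disabled GPUs always receive zero layers.
    """
    active = [g for g in gpus if g.enabled]
    plan = {g.index: 0 for g in gpus}
    if not active:
        return plan  # no GPU enabled: nothing is offloaded in this sketch
    base, extra = divmod(num_layers, len(active))
    for i, gpu in enumerate(active):
        # The first `extra` enabled GPUs each take one additional layer.
        plan[gpu.index] = base + (1 if i < extra else 0)
    return plan


# GPU 1 is disabled, so all 32 layers land on GPU 0.
print(split_evenly(32, [Gpu(0, True), Gpu(1, False)]))  # {0: 32, 1: 0}
```

With three enabled GPUs and 32 layers, the same function would give `{0: 11, 1: 11, 2: 10}`; the remainder goes to the GPUs listed first.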

Limit Model Offload to Dedicated GPU Memory

Currently CUDA Only

LLMs can be memory-intensive. The components that generally take up the most memory are the model weights and the conversation context buffers. When the model weights are too large to fit in a GPU's dedicated memory, your operating system might place part of them in Shared GPU Memory, which can slow things down significantly.

The video first shows this option OFF, resulting in allocation to both dedicated and shared memory. Then, the option is turned ON, and the model is loaded into dedicated memory only.

Limit model offload to dedicated GPU memory

Model weights in Dedicated GPU Memory

The "Limit Model Offload to Dedicated GPU Memory" mode ensures that model weights are loaded only into dedicated GPU memory. If the model weights are too large to fit there, LM Studio automatically reduces the GPU offload size so that the offloaded weights fit in dedicated GPU memory, and keeps the rest in system RAM.
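A minimal sketch of that idea, assuming (hypothetically) that weights are offloaded in equal-sized layers and that some dedicated memory is held back for other buffers. The function name, parameters, and numbers are illustrative only and do not describe LM Studio's actual logic.

```python
def layers_that_fit(num_layers: int, layer_bytes: int,
                    dedicated_free_bytes: int,
                    reserve_bytes: int = 0) -> tuple[int, int]:
    """Hypothetical sketch: return (layers on GPU, layers in system RAM).

    Only whole layers are offloaded, and the offloaded weights must fit in
    free dedicated memory minus a reserve; everything else stays in RAM.
    """
    budget = max(dedicated_free_bytes - reserve_bytes, 0)
    on_gpu = min(num_layers, budget // layer_bytes)
    return on_gpu, num_layers - on_gpu


GiB = 1024 ** 3
# 48 layers of 0.5 GiB each (24 GiB of weights), 16 GiB free, 2 GiB reserved:
# 28 layers go to dedicated GPU memory and 20 stay in system RAM.
print(layers_that_fit(48, GiB // 2, 16 * GiB, 2 * GiB))  # (28, 20)
```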

From our testing, splitting model weights between dedicated GPU memory and system RAM is faster than using shared GPU memory. If your experience is different, please let us know!

Context may be allocated in Shared GPU Memory

Context buffers may still use shared memory. Suppose a model's weights fit in dedicated GPU memory with some space to spare. The model runs at full speed until the context grows beyond the remaining dedicated memory; from then on, performance gradually slows as the context overflows into shared memory. This approach keeps initial performance fast, instead of placing all context in slower RAM from the start.
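The arithmetic behind this can be sketched as follows. For illustration only, it assumes the context buffer grows by a fixed number of bytes per token; real per-token costs depend on the model and its settings and are not specified here. The function names are hypothetical.

```python
def overflow_token(dedicated_total: int, weights: int, bytes_per_token: int) -> int:
    """Hypothetical sketch: tokens of context that fit in the dedicated
    memory left over after the weights (assumes linear growth per token)."""
    spare = max(dedicated_total - weights, 0)
    return spare // bytes_per_token


def context_placement(tokens: int, dedicated_total: int, weights: int,
                      bytes_per_token: int) -> tuple[int, int]:
    """Split a context of `tokens` into (tokens in dedicated, tokens in shared)."""
    fits = overflow_token(dedicated_total, weights, bytes_per_token)
    in_dedicated = min(tokens, fits)
    return in_dedicated, tokens - in_dedicated


MiB = 1024 ** 2
GiB = 1024 ** 3
# 24 GiB card, 20 GiB of weights, assumed 0.5 MiB of context per token:
print(overflow_token(24 * GiB, 20 * GiB, MiB // 2))  # 8192
print(context_placement(10_000, 24 * GiB, 20 * GiB, MiB // 2))  # (8192, 1808)
```

Under these assumptions, the first 8192 tokens stay in dedicated memory and only the tokens beyond that point spill into shared memory.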

Priority Order Mode

Currently CUDA Only

You can now set the priority order of GPUs. What this actually means:

- If you have multiple GPUs, you can set the order in which LM Studio should try to allocate models to GPUs.

- The system will try to allocate more on GPUs listed first. Once the first GPU is full, it will move to the next one in the list, and so on.

The video demonstrates loading multiple models. Notice how LM Studio fills GPU 0 completely before allocating on GPU 1.

Fill in order mode: Allocate models following your specified GPU priority sequence
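The fill-in-order behavior described above can be sketched as a greedy allocator. This is a hypothetical illustration, not LM Studio's code: it assumes each model is split into equal-sized layers and that each GPU's free memory is known up front. The function name and the use of key `-1` for system RAM are choices made for this sketch.

```python
def fill_in_priority_order(num_layers: int, layer_bytes: int,
                           free_bytes_by_gpu: dict[int, int],
                           priority: list[int]) -> dict[int, int]:
    """Hypothetical sketch: place layers on GPUs following `priority`.

    Each GPU is filled as far as its free memory allows before moving on
    to the next GPU in the list. Leftover layers (key -1) stay in RAM.
    """
    plan: dict[int, int] = {}
    remaining = num_layers
    for gpu in priority:
        capacity = free_bytes_by_gpu[gpu] // layer_bytes
        placed = min(remaining, capacity)
        plan[gpu] = placed
        remaining -= placed
    plan[-1] = remaining  # -1 stands for system RAM in this sketch
    return plan


GiB = 1024 ** 3
free = {0: 8 * GiB, 1: 8 * GiB}
# 40 layers of 0.25 GiB: GPU 0 takes 32, the remaining 8 go to GPU 1.
print(fill_in_priority_order(40, GiB // 4, free, priority=[0, 1]))
# {0: 32, 1: 8, -1: 0}
```

Swapping the list to `priority=[1, 0]` would make GPU 1 take 32 layers and GPU 0 the remaining 8. An even split, by contrast, would spread the same 40 layers 20/20.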

Got a setup with 3+ GPUs? We'd love to hear from you!

We are looking for users who can help us test these features and share feedback on how they work with such setups. If you have a Windows or Linux setup with 3+ GPUs, we'd love to hear from you at [email protected]. Thank you!

0.3.14 - Full Release Notes

**Build 1**

- New: GPU Controls 🎛️
  - On multi-GPU setups, customize how models are offloaded onto your GPUs
  - Enable/disable individual GPUs
  - CUDA-specific features:
    - "Priority order" mode: The system will try to allocate more on GPUs listed first
    - "Limit Model Offload to Dedicated GPU memory" mode: The system will limit offload of model weights to dedicated GPU memory and RAM only. Context may still use shared memory
  - How to open GPU controls:
    - Windows: `Ctrl+Shift+H`
    - Mac: `Cmd+Shift+H`
  - How to open GPU controls in a pop-out window:
    - Windows: `Ctrl+Alt+Shift+H`
    - Mac: `Cmd+Option+Shift+H`
    - Benefit: Manage GPU settings while models are loading
- LG AI EXAONE Deep reasoning model support
- Improved model loader UI in small window sizes
- Improved Llama model family tool call reliability through the LM Studio SDK and OpenAI-compatible streaming API
- [SDK] Added support for GBNF grammar when using structured generation
- [SDK/RESTful API] Added support for specifying presets
- Fixed a bug where the last couple of fragments of a prediction were sometimes lost

**Build 2**

- Optimized "Limit Model Offload to Dedicated GPU memory" mode in long context situations on single GPU setups
- Speculative decoding draft model now respects GPU controls
- [CUDA] Fixed a bug where the model would crash with the message "Invalid device index"
- [Windows ARM] Fixed chat with document sometimes not working

**Build 3**

- [Advanced GPU controls] Fixed a bug where intermediate buffers were being allocated on disabled GPUs
- Fixed "OpenSquareBracket !== CloseStatement" bug with Nemotron model
- Fixed a bug where Nemotron GGUF model metadata was not being read properly
- [Windows] Fixed: Make sure the LM Studio.exe executable is also signed. Should help with anti-virus false positives

**Build 4**

- [Advanced GPU controls] Allow disabling all GPUs with any engine
- [Advanced GPU controls] Fixed a bug where disabling a GPU would cause incorrect offloading with more than 2 GPUs
- [Advanced GPU controls][CUDA] Improved stability of "Limit Model Offload to Dedicated GPU memory" mode
- Added GPU controls logging to "Developer Logs"
- Fixed a bug where editing model config inside the model loader popover sometimes did not take effect
- Fixed a bug related to renaming state focus on chat cells

**Build 5**

- [Advanced GPU controls] Enlarged GPU controls pop-out window

Even More

- Download the latest LM Studio app for macOS, Windows, or Linux.

- If you want to use LM Studio at your organization, get in touch: LM Studio @ Work

- For discussions and community, join our Discord server.

- New to LM Studio? Head over to the documentation: Docs: Getting Started with LM Studio.